Hey crew,

OpenAI dropped GPT-5.2 this week and… people are not happy.

We've also got a new image model from OpenAI, Shopify's AI customers that test your store for you, and I finally went down the MCP rabbit hole (spoiler: my Claude employees can use tools now and it's a game changer).

Here's what matters this week for your work…

📌 TL;DR

GPT-5.2 dropped → Better benchmarks, worse vibes. Great for spreadsheets and deep analysis, but users are calling it over-censored and preachy. Opus 4.5 still my pick for creative work.

GPT Image 1.5 is live → Faster, cleaner text, transparent PNGs. Massive upgrade, but Nano Banana Pro still wins.

Shopify's AI customers → SimGym lets you A/B test your store with fake shoppers before real ones show up. Big unlock for small merchants.

Builder's notes → I finally dove into MCPs. My Claude employees can now use Perplexity, Notion, Reddit, n8n, and more.

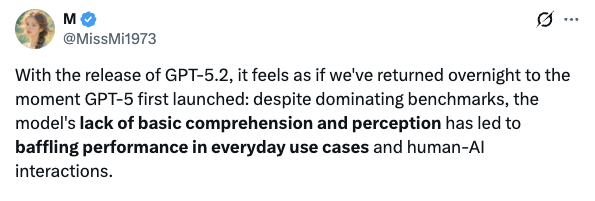

GPT-5.2 is here… and everyone hates it?

OpenAI just dropped GPT-5.2 in three flavours: Instant, Thinking, and Pro.

Don't let the small version bump fool you. On paper, this is a big upgrade: better coding benchmarks, improved vision, lower hallucination rates, and apparently it's really good at spreadsheets. It now sits alongside Gemini 3 Pro and Opus 4.5 (though I wouldn't say it's definitely better than either of them).

But benchmarks don't tell you how a model feels to use. And the early consensus is BRUTAL…

The main gripes: over-censorship (it's now the most censored model on the Sansa benchmark), tone-deaf responses that miss emotional context, and rigid, preachy outputs. As one user put it: "GPT-5.2 is proof that benchmarks can't be trusted."

It does seem to excel, however, at deep, structured analytical work. Dan Shipper from Every had it review a full profit and loss statement. The model worked for two hours straight, checked every formula and individual expense, and delivered an accurate, well-structured summary.

For writing, it scored 74% on Spiral's 50-prompt test, trailing Opus 4.5's 80% but matching Sonnet 4.5, and it uses fewer tired AI phrases like "It's not X, it's Y."

That said, I've heard plenty of people raving about it. Put it through your own tests and form your own opinion.

I'll use 5.2 for day-to-day ChatGPT tasks. But Opus 4.5 remains my workhorse for anything requiring creativity, intelligence, or autonomy. It's the best model I've used, and I prefer it to both Gemini and GPT.

OpenAI released their new image model

OpenAI shipped GPT Image 1.5 this week. It's now live in ChatGPT for all users, including the free tier.

The improvements: better edit preservation, 4x faster generation, and they finally fixed that weird yellow tinge from the previous version.

So does it beat Nano Banana Pro? Not quite.

Community stress-tests show GPT Image 1.5 is a solid step up from where OpenAI was. But Google still holds the crown for most practical use cases.

Where GPT Image 1.5 wins:

Emotions and expressions. It renders tricky facial expressions like "nostalgia" or "relief" better than Nano Banana. Text rendering is cleaner too, with fewer misspellings on dense layouts. And it now generates transparent PNGs, which Nano Banana can't do.

Where Nano Banana Pro still wins:

Pretty much everything else, but especially world knowledge. Google's model just knows more stuff: celebrity likeness, spatial understanding (like turning a room photo into a floor plan), and technical diagrams. GPT Image 1.5 also censors everything. I asked it to generate an image of a boxing scene and it wouldn’t do it.

The verdict:

For quick edits and casual image generation inside ChatGPT, GPT Image 1.5 is a useful upgrade. For serious creative work, Nano Banana Pro remains the better tool and the better all-round image model.

GPT Image 1.5 does win on generation speed, though. Nano Banana Pro (NBP) is slow af.

Shopify's new AI customers will test your store before real ones do

Shopify dropped their Winter '26 Edition with 150+ new features. The standout is SimGym: AI customers that browse your store, complete tasks, and reveal where you can optimise. You can A/B test your website with zero live traffic. For small merchants without the volume for proper testing, this is a big unlock.

They also upgraded their AI assistant, “Sidekick”. It now monitors your store and surfaces personalised recommendations, builds automations from plain English ("tag customers who spend over $200"), edits themes via chat ("make this button rounded"), plus more.

They released this with one of the coolest landing pages I’ve ever seen.

💡 Builder’s notes

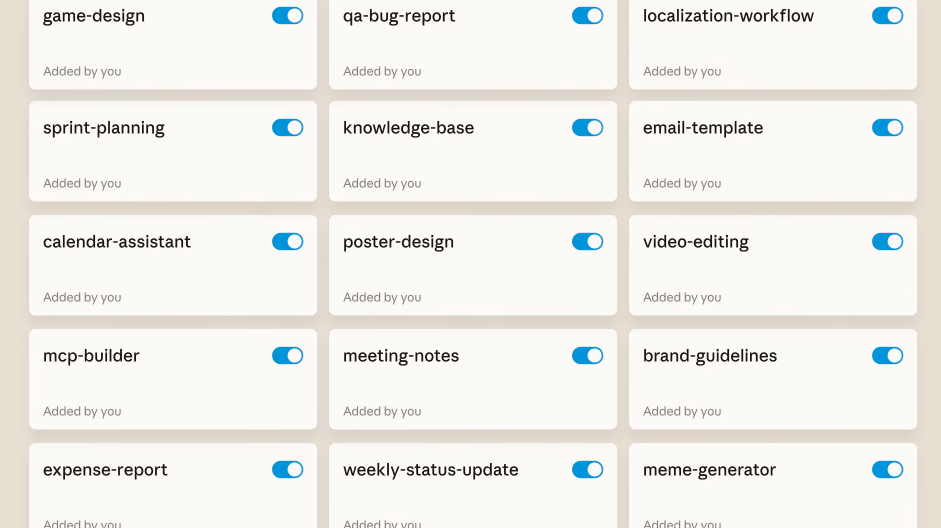

I’ve been diving into the world of MCPs this week and f*ck me, they are awesome.

MCP stands for Model Context Protocol. Think of it like USB for AI. Before USB, every device had its own weird plug and you needed a drawer full of adapters. USB standardised everything: one port, and everything works. MCP does the same thing for AI tools. You have one protocol for thousands of integrations. If you want to learn more, I've linked a great video below in Brain food.

I know they’re not technically the same thing, but I like to think of MCP like an API call: it lets AI models like Claude use tools like Notion, Reddit, Gmail, and many, many more.

I have AI employees built in Claude as projects. Each one has custom instructions outlining its role, goals, knowledge, etc.; context added as project files (things like brand voice, ideal customer profile, etc.); and skills with my specialised knowledge and repeatable processes (how to write image prompts, how to write hooks, reporting frameworks). AND NOW MY AI EMPLOYEES CAN USE TOOLS!!

With MCPs, Claude can interact with other software the same way an API call would.
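For the curious, here's roughly what that "API call" looks like under the hood. MCP messages are JSON-RPC 2.0, and a client like Claude asks a server what tools it has (`tools/list`) and then calls one (`tools/call`). This is a toy sketch, not a real MCP server: it skips the `initialize` handshake and the transport (stdio/HTTP), and the `add_content_idea` tool and its in-memory "database" are hypothetical stand-ins for something a Notion server might expose.

```python
import json

# Hypothetical tool a Notion-style MCP server might advertise.
# Name, description and schema are made up for illustration.
TOOLS = {
    "add_content_idea": {
        "description": "Add a content idea to a Content database",
        "inputSchema": {
            "type": "object",
            "properties": {"title": {"type": "string"}},
            "required": ["title"],
        },
    }
}

IDEAS = []  # fake in-memory "database" so the sketch runs offline


def add_content_idea(title: str) -> str:
    """Hypothetical tool implementation: store the idea and report back."""
    IDEAS.append(title)
    return f"Added '{title}' ({len(IDEAS)} ideas total)"


HANDLERS = {"add_content_idea": add_content_idea}


def handle(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request the way an MCP server would (simplified)."""
    req = json.loads(raw)
    method = req.get("method")
    if method == "tools/list":
        # Advertise every tool with its name, description and input schema.
        result = {"tools": [{"name": n, **spec} for n, spec in TOOLS.items()]}
    elif method == "tools/call":
        # Run the named tool with the arguments the model chose.
        params = req["params"]
        text = HANDLERS[params["name"]](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        # Standard JSON-RPC "method not found" error.
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "result": result})


# What the client (e.g. Claude) might send after deciding to use the tool.
call = {
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "add_content_idea", "arguments": {"title": "MCP explainer"}},
}
print(handle(json.dumps(call)))
```

The point is the "one port" idea: any server that speaks these same messages plugs into any MCP client, so Claude doesn't need a custom adapter per app.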

My AI employees now have:

Perplexity and Reddit MCPs for research

Notion MCPs to add and update things in my workspace

An n8n MCP to build automation workflows for me

And way more

Claude has always been dogshit at research compared to other models, so I gave it a Perplexity tool via MCP. Now I don’t need my Perplexity subscription, and I can do Perplexity research without even leaving Claude.

🧰 Tools to try

Skills MP → A marketplace for Claude Skills

MCP.so → A directory of MCP servers you can give your AI. Thousands of integrations: Notion, Slack, GitHub, you name it.

TrackWeight → Turn your MacBook's trackpad into a scale. Completely useless. Absolutely essential. Try it if you need to weigh something small and want to feel like a wizard.

🥣 Brain food

OpenAI dropped merch → The website is baller. I’m going to become an OpenAI hypebeast.

Gamified fitness app idea → Someone mapped out a concept for turning workouts into a game using SAM 3. If you're into product thinking or fitness tech, worth a look.

Vibe coding beautiful websites → Great walkthrough using Gemini 3 + Claude Opus to build genuinely good-looking landing pages.

MCP In 26 Minutes → If my MCP explainer left you wanting more, this is the deep dive. Clear breakdown of how the whole protocol actually works.

I’m currently getting flamed on Threads for my engagement bait 😂. I’ve found out the hard way that the art community HATES AI. Pretty funny.

Anyways, go crush it this week. If you haven’t dabbled in MCPs, I’d block out 60 mins to set some up in Claude. You can't seem to use MCPs in the Gemini or ChatGPT apps, which is another reason why Claude is superior.

If you use Notion, that's an easy win. You can now have Claude read any of your Notion content, build you templates, and add pages to your Notion, e.g. “Add this content idea to my Content database”.

Enjoy,